How to Buy a TV for Gaming in 2020

Display technology has come a long way in a decade. If you want a TV for video games, including a next-gen console and PC titles, your needs are quite different from the average shopper.

The Importance of HDMI 2.1

The next generation of consoles and high-end PC graphics cards is here. Sony and Microsoft are battling it out with the PlayStation 5 and Xbox Series X, both of which sport HDMI 2.1 ports. NVIDIA has also unleashed its record-breaking 30 series cards with full support for HDMI 2.1.

So, what’s the big deal about this new standard?

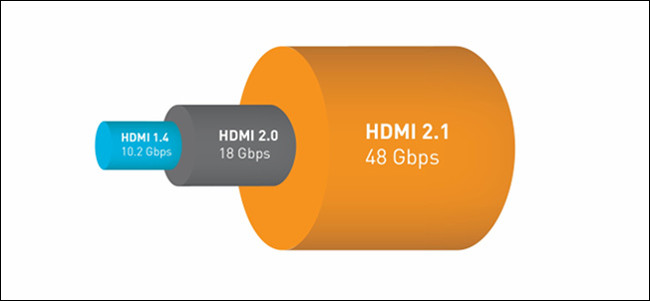

High-Definition Multimedia Interface (HDMI) is how your TV connects to consoles, Blu-ray players, and many PC graphics cards. HDMI 2.0b caps out at a bandwidth of 18 Gbits per second, which is enough for 4K content at 60 frames per second.

HDMI 2.1 enables speeds of up to 48 Gbits per second. This includes support for 4K at 120 frames per second (with HDR), or 8K at 60 frames per second. There’s also support for uncompressed audio, and a host of other features, like variable refresh rates (VRR), and automatic low-latency mode (ALLM) to minimize input lag.
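As a rough sanity check on those figures, the sketch below estimates the raw pixel payload of a video signal. It counts active pixels only and assumes full RGB (three color channels per pixel). Real HDMI links also carry blanking intervals and link-encoding overhead, so actual requirements are higher than these lower bounds. The function name `raw_gbps` is made up for illustration.

```python
def raw_gbps(width, height, fps, bits_per_channel, channels=3):
    """Lower-bound data rate in Gbit/s.

    Assumption: active pixels only, with no blanking intervals or
    link-encoding overhead, so a real link needs more than this.
    """
    bits_per_second = width * height * fps * bits_per_channel * channels
    return bits_per_second / 1e9


# 4K at 60 fps with 8-bit color vs. 4K at 120 fps with 10-bit (HDR) color
uhd_60_sdr = raw_gbps(3840, 2160, 60, 8)
uhd_120_hdr = raw_gbps(3840, 2160, 120, 10)

print(f"4K/60, 8-bit:   {uhd_60_sdr:.2f} Gbit/s")
print(f"4K/120, 10-bit: {uhd_120_hdr:.2f} Gbit/s")
```

Even before overhead, the 4K/120 HDR signal needs well over the 18 Gbits per second that HDMI 2.0b offers, which is why the newer standard matters for high-frame-rate gaming.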

Keep in mind, though, HDMI 2.1 is only worth it if a TV has a 120 Hz panel. Some TVs, like the Samsung Q60T, advertise HDMI 2.1 support, but only have a 60 Hz panel. That means they can’t take advantage of 120 frames per second because the display is only capable of 60 frames per second.

Do you need all that extra bandwidth? If you want to make the most out of the new consoles, you do. However, it’s unclear how many next-gen games will support 4K resolution at 120 frames per second. Microsoft announced that a handful of Xbox Series X titles will support 4K/120. The list includes Halo Infinite’s multiplayer component (delayed until 2021), the eye-candy platformer Ori and the Will of the Wisps, Dirt 5, and Gears 5.

Most games from the previous generation ran at 30 frames per second, including big-budget first-party releases, like The Last of Us Part II, and third-party mainstays, like Assassin’s Creed. Microsoft improved on this with the Xbox One X by optimizing some games to run at 60 frames, instead.

Both the PS5 and Xbox Series X will target 4K 60 frames as a baseline. If you want to future-proof, shop for a 120 Hz display with HDMI 2.1 compatibility, even if the ports cap out at 40 Gbits per second (like some 2020 LG and Sony TVs and receivers). The 40 Gbits per second is enough for a 4K signal at 120 frames with full, 10-bit HDR support.

Even NVIDIA has unlocked 10-bit support on its 30 series cards. This allows displays with 40 Gbits per second to handle 120 frames at 4K with 10-bit without chroma subsampling (that is, making some channels omit certain color information).
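To see why chroma subsampling saves bandwidth, here is a back-of-the-envelope sketch. It assumes the common 4:4:4, 4:2:2, and 4:2:0 schemes, which carry an average of 3, 2, and 1.5 samples per pixel, respectively. As a simplification, it counts active pixels only and ignores blanking and link overhead. The names are illustrative.

```python
# Average number of samples carried per pixel for each scheme.
# 4:4:4 keeps full color for every pixel; the others share color
# samples between neighboring pixels.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def payload_gbps(width, height, fps, bit_depth, chroma="4:4:4"):
    """Active-pixel payload in Gbit/s (no blanking or link overhead)."""
    bits = width * height * fps * bit_depth * SAMPLES_PER_PIXEL[chroma]
    return bits / 1e9


for scheme in SAMPLES_PER_PIXEL:
    rate = payload_gbps(3840, 2160, 120, 10, scheme)
    print(f"4K/120 10-bit {scheme}: {rate:.2f} Gbit/s")
```

In this simplified model, 4:2:0 halves the payload compared with 4:4:4, at the cost of some color detail. Avoiding subsampling is most noticeable with fine text, such as a PC desktop.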

If you’re staying with your PlayStation 4 or Xbox One for a bit, or you don’t need 120-frame-per-second gameplay, HDMI 2.0b is fine for now. It’s also fine if you’re getting the cheaper Xbox Series S, which targets 1440p, rather than full 4K.

Over the next few years, more and more models will support HDMI 2.1, and you’ll have more options, which means more opportunities to save money.

Variable Refresh Rate, Auto Low Latency Mode, and Quick Frame Transport

Some of the new HDMI 2.1 features are also available via the older HDMI 2.0b standard and have been implemented on TVs that don’t explicitly support HDMI 2.1.

Variable Refresh Rate (VRR or HDMI VRR) is a technology that rivals NVIDIA G-Sync and AMD FreeSync. While those two are aimed primarily at PC gamers, HDMI VRR is for consoles. Currently, only Microsoft has committed to this feature in the Xbox Series X and S, but the PlayStation 5 is also expected to support it.

VRR is designed to prevent screen tearing, which is an unsightly side effect of a console that can’t keep up with the refresh rate of the display. If the console isn’t ready to send a full frame, it sends a partial one instead, which causes a “tearing” effect. When the refresh rate is in unison with the frame rate, tearing is all but eliminated.
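A simplified model can make tearing concrete. The sketch below assumes a fixed-refresh display with no V-Sync, where the source swaps in a new frame the moment it is ready. If that moment falls partway through a refresh, the swap shows up as a tear line at the corresponding height on-screen. The function name and the frame times are hypothetical.

```python
def tear_positions(frame_ready_ms, refresh_hz):
    """Where each frame swap lands within a refresh cycle.

    Returns a fraction of screen height per frame: 0.0 means the swap
    coincides with the start of a refresh (no visible tear), while 0.5
    means the tear line sits halfway down the screen.
    """
    interval_ms = 1000.0 / refresh_hz
    return [round((t % interval_ms) / interval_ms, 2) for t in frame_ready_ms]


# Hypothetical frame completion times (ms) from a source that
# cannot quite keep up with a 60 Hz display.
ready_times = [18.0, 39.5, 58.0, 81.0]
print(tear_positions(ready_times, refresh_hz=60))
```

With VRR, the display instead starts each refresh when the frame arrives (within its supported range), so every swap effectively lands at position 0.0.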

Auto Low Latency Mode (ALLM) is an intelligent method of disabling processing to reduce latency when playing games. When the TV detects ALLM, it automatically disables features that could introduce latency. With ALLM, you don’t have to remember to switch to Game mode for the best performance.

Quick Frame Transport (QFT) works with VRR and ALLM to further reduce latency and screen tearing. QFT transports frames from the source at a higher rate than existing HDMI technology. This makes games seem more responsive.

All devices in the HDMI chain need support for these features to work, including AV receivers.

Let’s Talk Latency

While shopping for a new TV, you’ll likely see two similar-sounding terms that refer to different things: latency (or lag) and response time.

Latency is the time it takes for the display to react to your input. For example, if you press a button to jump on the controller, the latency is how long it takes for your character to jump on-screen. Lower latency can give you the edge in competitive multiplayer games or make fast-paced, single-player games more responsive.

This delay is measured in milliseconds. Generally, a latency of 15 ms or less is imperceptible. Some high-end TVs get this down to around 10 ms, but anything below 25 ms is usually good enough. How important this is depends entirely on the sort of games you play.
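It can help to think of these figures in terms of frames rather than milliseconds. The small sketch below converts a latency figure into the number of frames that pass at a given frame rate. The helper name is made up for illustration.

```python
def latency_in_frames(latency_ms, fps):
    """How many frames elapse during the given latency."""
    return latency_ms * fps / 1000.0


for latency_ms in (10, 15, 25):
    for fps in (60, 120):
        frames = latency_in_frames(latency_ms, fps)
        print(f"{latency_ms} ms at {fps} fps = {frames:.1f} frames")
```

At 60 frames per second, 25 ms of latency is a delay of about a frame and a half. At 120 frames per second, the same 25 ms spans three frames, which is one reason low latency matters more for high-frame-rate play.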

Response time refers to pixel response. This is how long it takes a pixel to change from one color to another, usually quoted in “gray-to-gray” performance. This is also measured in milliseconds, and it’s not unusual for high-end displays to have a pixel response of 1 ms or better. OLED displays, in particular, have an almost instantaneous response time.

Many premium and flagship TVs have good latency and response times. Budget TVs can be hit or miss, so make sure you do your research before purchasing. Over at RTINGS, they test for latency and list all review models by input lag if you want to see how the one you’re considering stacks up.

FreeSync and G-Sync

Variable refresh rates eliminate screen tearing by matching the refresh rate of the monitor to the frame rate of the source. On a PC, that’s a graphics card or GPU. Both NVIDIA and AMD have proprietary technologies that deal with this issue.

G-Sync is NVIDIA’s variable-refresh-rate technology, and it requires a hardware chip on the display. It only works with NVIDIA graphics cards, though. If you have an NVIDIA GTX or RTX card you want to use with your new TV, just make sure the TV has G-Sync support.

There are currently the following three tiers of G-Sync:

- G-Sync: Provides tear-free gaming in standard dynamic range.

- G-Sync Ultimate: Designed for use with HDR up to 1,000 nits brightness.

- G-Sync Compatible: These are displays that lack the prerequisite chip, but still work with regular G-Sync.

FreeSync is AMD’s equivalent technology, and it works with AMD’s Radeon line of GPUs. There are three tiers of FreeSync, as well:

- FreeSync: Removes screen tearing.

- FreeSync Premium: Incorporates low-frame-rate compensation to boost low frame rates. It requires a 120 Hz display at 1080p or better.

- FreeSync Premium Pro: Adds support for HDR content up to 400 nits.
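Low-frame-rate compensation generally works by showing each frame more than once when the frame rate drops below the display’s minimum variable refresh rate, so the panel stays inside its supported range. The sketch below is a simplified model of that idea, not AMD’s actual implementation. The function name and the 48 to 120 Hz range are hypothetical examples.

```python
import math


def lfc_plan(fps, vrr_min_hz, vrr_max_hz):
    """Return (repeats, panel_hz) for a given source frame rate.

    repeats is how many times each frame is shown, and panel_hz is the
    resulting refresh rate. Simplified illustration only.
    """
    if fps >= vrr_min_hz:
        # Already inside the VRR range: show each frame once.
        return 1, min(fps, vrr_max_hz)
    # Below the range: repeat frames until the panel rate is high enough.
    repeats = math.ceil(vrr_min_hz / fps)
    panel_hz = fps * repeats
    if panel_hz > vrr_max_hz:
        raise ValueError("VRR range too narrow for frame repetition")
    return repeats, panel_hz


print(lfc_plan(30, 48, 120))  # each frame shown twice, panel runs at 60 Hz
print(lfc_plan(90, 48, 120))  # inside the range, no repetition needed
```

This also hints at why a wide refresh range, such as that of a 120 Hz panel, is useful here: the maximum rate needs to be high enough that a repeated frame still fits inside the range.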

Many TVs that support G-Sync will also work with FreeSync (and vice versa). Currently, there are very few TVs that explicitly support G-Sync, notably, LG’s flagship OLED lineup. FreeSync is cheaper to implement because it doesn’t require any additional hardware, so it’s widely found on more affordable displays.

Since AMD is making the GPUs inside both the Xbox Series X/S and the PlayStation 5, FreeSync support might be more important for console gamers this generation. Microsoft confirmed FreeSync Premium Pro support for the upcoming Series X (in addition to HDMI VRR), but it’s unclear what Sony is using.

Consider Where You’ll Be Playing

There are currently two main panel types on the market: LED-lit LCDs (including QLEDs) and self-lit OLEDs. LCD panels can get much brighter than OLEDs because OLED is a self-emissive organic technology that’s more susceptible to permanent image retention at high brightness.

If you’re going to be playing in a very bright room, you might find an OLED simply isn’t bright enough. Most OLED panels are subject to auto-brightness limiting (ABL), which reduces the overall screen brightness in well-lit scenes. LCD panels aren’t susceptible to this and can get much brighter.

If you play mostly during the day in a room full of windows with lots of ambient lighting, an LCD might be the better choice. However, in a light-controlled room at night with subtle lighting, an OLED will give you the best picture quality.

Generally, OLEDs provide excellent image quality due to their (theoretically) infinite contrast ratio. QLED models (LED-lit LCDs with a quantum dot film) have higher color volume, meaning they can display more colors and get brighter. It’s up to you to decide which better suits your budget and gaming environment.

OLED Burn-in

OLED panels are susceptible to permanent image retention, or “burn-in.” This is caused by static content, like scoreboards or TV channel logos, remaining on-screen for an extended period. For gamers, this also applies to HUD elements, like health bars and mini maps.

For most people, this won’t be a problem. If you vary your content consumption so the panel wears evenly, you likely won’t encounter burn-in. Also, if you play a variety of games, this won’t be much of an issue.

It could be a problem, however, for people who play the same game for months, especially if it’s heavy on HUD elements. One way of cutting down your risk of burn-in is to enable HUD transparency or disable the HUD altogether. Of course, this isn’t always possible or desirable.

Many OLED TVs now include burn-in reduction measures, like LG’s Logo Luminance feature, which dims the screen when static content is displayed for two minutes or more. This should help keep burn-in at bay.

For PC gamers who use a TV as a monitor (with taskbars and desktop icons on-screen), an OLED probably isn’t the best choice. Any amount of static image poses a burn-in risk. Unless you’re using a display solely for playing games or watching movies, you might want to consider a high-end LCD panel, instead.

Not all burn-in is noticeable during real-world use. Many people only discover it when they run test patterns, including color slides. Unfortunately, most warranties, particularly from manufacturers, don’t cover burn-in. If you’re concerned and still want an OLED, consider getting an extended warranty from a store like Best Buy that explicitly covers this issue.

HDR, the HDR Gaming Interest Group, and Dolby Vision

HDR gaming is about to become mainstream with the release of the PlayStation 5 and Xbox Series X/S. With both platforms supporting HDR in some form, you’ll want to make sure your next TV is at least HDR10 compliant, so you’ll get richer, brighter, and more detailed images.

The HDR Gaming Interest Group (HGIG) formed in an attempt to standardize HDR gaming via the HGIG format. Games must be certified for HGIG support. The format is expected to take off with the arrival of next-generation games, so it’s probably worth it to look for an HDR TV with HGIG support.

Both the Xbox Series X and S will also have support for Dolby Vision HDR, which is yet another format. Unlike HDR10, which uses static metadata, Dolby Vision uses dynamic metadata on a scene-by-scene basis. Currently, content mastered in Dolby Vision can go up to 4,000 nits peak brightness, although no consumer displays can yet reach those levels.

To use Dolby Vision on your new Xbox, you’ll need a TV that supports it. Manufacturers like LG, Vizio, Hisense, and TCL all produce TVs with Dolby Vision support. Samsung, however, has shunned the format in favor of HDR10+. If you’re getting a next-gen Xbox, though, keep in mind that games will explicitly need to support the feature.

The Next Generation of Gaming

This has been a turbulent year for most, so the arrival of next-generation consoles and graphics cards is even more exciting than usual. It’s also not a bad time to upgrade your TV, particularly if you’ve put off getting a 4K set so far.

The price of LG’s OLEDs has significantly dropped over the last few years. Quantum Dot films are now found in budget $700 LCD sets, which means you can have a bright, colorful image without shelling out thousands.

Even more price cuts, 120 Hz panels, mini-LED TVs, and widespread adoption of HDMI 2.1 are coming soon.
